Solving actor-isolated protocol conformance related errors in Swift 6.2

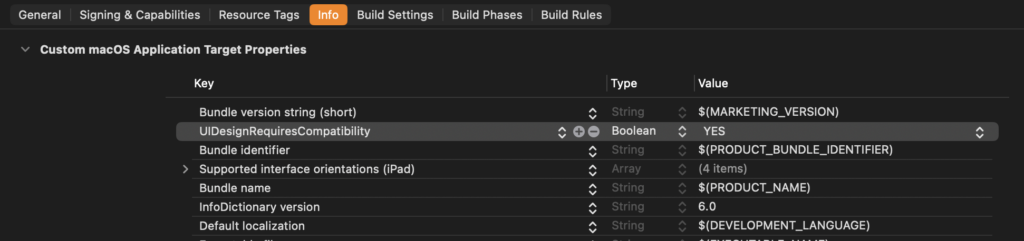

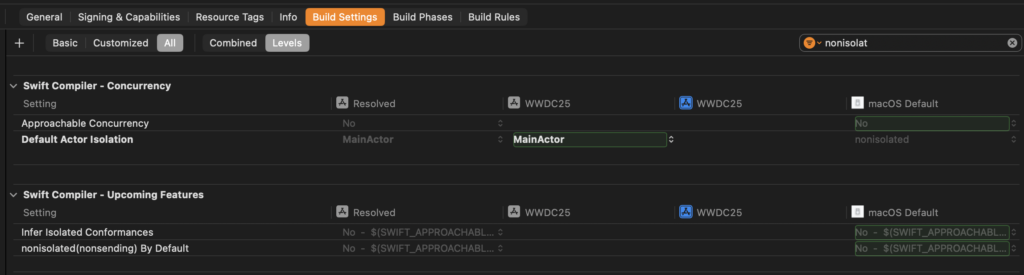

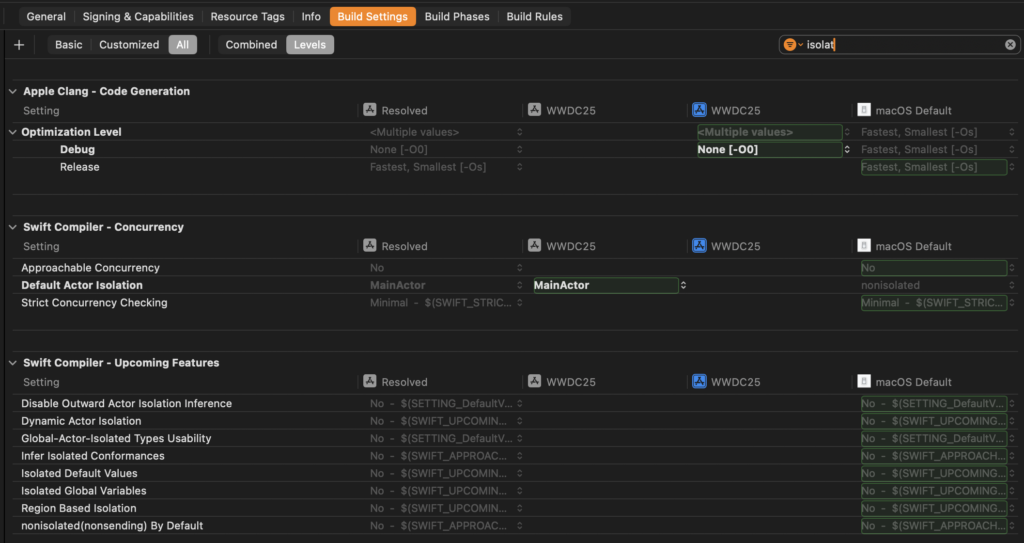

Swift 6.2 comes with several quality-of-life improvements for concurrency. One of these features is the ability to have actor-isolated conformances to protocols. Another is the option to run your code on the main actor by default, which is the setting that new Xcode 26 app projects start with.
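For reference, this is roughly how a Swift package can opt in to main actor isolation by default. Treat it as a sketch: the package and target name MyApp are placeholders I made up, and in an Xcode project you'd look for the Default Actor Isolation build setting instead.

```swift
// swift-tools-version: 6.2
import PackageDescription

let package = Package(
    name: "MyApp", // hypothetical package name
    targets: [
        .executableTarget(
            name: "MyApp", // hypothetical target name
            swiftSettings: [
                // Treat unannotated declarations in this target as @MainActor.
                .defaultIsolation(MainActor.self)
            ]
        )
    ]
)
```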

These features do mean that you’ll sometimes run into compiler errors. In this blog post, I’ll explore these errors and how you can fix them.

Before we do, let’s briefly talk about actor-isolated protocol conformance to understand what this feature is about.

Understanding actor-isolated protocol conformance

Protocols in Swift can require certain functions or properties to be nonisolated. For example, we can define a protocol that requires a nonisolated var name like this:

```swift
protocol MyProtocol {
    nonisolated var name: String { get }
}

class MyModelType: MyProtocol {
    var name: String

    init(name: String) {
        self.name = name
    }
}
```

Our code will not compile at the moment with the following error:

```
Conformance of 'MyModelType' to protocol 'MyProtocol' crosses into main actor-isolated code and can cause data races
```

In other words, our MyModelType is isolated to the main actor and our name protocol conformance isn’t. This means that using MyProtocol and its name in a nonisolated way can lead to data races because name isn’t actually nonisolated.

When you encounter an error like this you have two options:

- Embrace the nonisolated nature of name

- Isolate your conformance to the main actor

The first solution usually means that you don’t just make your property nonisolated, but you apply this to your entire type:

```swift
nonisolated class MyModelType: MyProtocol {
    // ...
}
```

This might work, but you’re now breaking out of main actor isolation and potentially opening yourself up to new data races and compiler errors.

When your code runs on the main actor by default, going nonisolated is often not what you want; everything else is still on main so it makes sense for MyModelType to stay there too.

In this case, we can mark our MyProtocol conformance as @MainActor:

```swift
class MyModelType: @MainActor MyProtocol {
    // ...
}
```

By doing this, MyModelType conforms to MyProtocol, but only when we’re on the main actor. This automatically makes the nonisolated requirement for name pointless because we’re always going to be on the main actor when we’re using MyModelType as a MyProtocol.
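To make this a bit more concrete, here’s a minimal sketch of using an isolated conformance from both sides of the isolation boundary. It assumes a Swift 6.2 compiler. The readName(of:), useOnMain(), and useOffMain(_:) helpers are hypothetical names I made up for illustration, and I’ve spelled out every isolation annotation so the snippet doesn’t depend on your project’s default isolation setting.

```swift
nonisolated protocol MyProtocol {
    var name: String { get }
}

@MainActor
final class MyModelType: @MainActor MyProtocol {
    var name: String

    init(name: String) {
        self.name = name
    }
}

// Hypothetical helper: a generic function with no Sendable requirement on T,
// so it can accept a value whose conformance is isolated to the main actor.
nonisolated func readName<T: MyProtocol>(of value: T) -> String {
    value.name
}

@MainActor
func useOnMain() -> String {
    let model = MyModelType(name: "Example")
    // We're on the main actor, so the isolated conformance can be used here.
    return readName(of: model)
}

nonisolated func useOffMain(_ model: MyModelType) {
    // Uncommenting the next line should fail to compile with an error along
    // the lines of "Main actor-isolated conformance of 'MyModelType' to
    // 'MyProtocol' cannot be used in nonisolated context".
    // _ = readName(of: model)
}
```

The compiler only lets us treat MyModelType as a MyProtocol in places where it can prove we’re on the main actor, which is exactly why the nonisolated requirement stops being a problem.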

This is incredibly useful in apps that are main actor by default because you don’t want your main actor types to have nonisolated properties or functions (usually). So conforming to protocols on the main actor makes a lot of sense in this case.

Now let’s look at some errors related to this feature, shall we? I initially encountered an error around my SwiftData code, so let’s start there.

Fixing Main actor-isolated conformance to 'PersistentModel' cannot be used in actor-isolated context

Let’s dig right into an example of what can happen when you’re using SwiftData and a custom model actor. The following model and model actor produce a compiler error that reads “Main actor-isolated conformance of 'Exercise' to 'PersistentModel' cannot be used in actor-isolated context”:

```swift
@Model
class Exercise {
    var name: String
    var date: Date

    init(name: String, date: Date) {
        self.name = name
        self.date = date
    }
}

@ModelActor
actor BackgroundActor {
    func example() {
        // Call to main actor-isolated initializer 'init(name:date:)' in a synchronous actor-isolated context
        let exercise = Exercise(name: "Running", date: Date())

        // Main actor-isolated conformance of 'Exercise' to 'PersistentModel' cannot be used in actor-isolated context
        modelContext.insert(exercise)
    }
}
```

There’s actually a second error here too because we’re calling the initializer for exercise from our BackgroundActor and the init for our Exercise is isolated to the main actor by default.

Fixing our problem in this case means that we need to allow Exercise to be created and used from non-main actor contexts. To do this, we can mark the SwiftData model as nonisolated:

```swift
@Model
nonisolated class Exercise {
    var name: String
    var date: Date

    init(name: String, date: Date) {
        self.name = name
        self.date = date
    }
}
```

Doing this will make both the init and our conformance to PersistentModel nonisolated, which means we’re free to use Exercise from non-main actor contexts.

Note that this does not mean that Exercise can safely be passed from one actor or isolation context to the other. It just means that we’re free to create and use Exercise instances away from the main actor.
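One common way to respect that limitation is to pass a PersistentIdentifier across the boundary instead of the model itself. The sketch below is my own illustration of that idea rather than code from a real project: addExercise(named:) and exerciseName(for:in:) are hypothetical helpers, and I’m assuming the main actor side has its own ModelContext for the same container.

```swift
import Foundation
import SwiftData

@Model
nonisolated class Exercise {
    var name: String
    var date: Date

    init(name: String, date: Date) {
        self.name = name
        self.date = date
    }
}

@ModelActor
actor BackgroundActor {
    // Hypothetical helper: creates the model on this actor and hands back
    // only its Sendable identifier, never the Exercise instance itself.
    func addExercise(named name: String) throws -> PersistentIdentifier {
        let exercise = Exercise(name: name, date: Date())
        modelContext.insert(exercise)
        try modelContext.save()
        return exercise.persistentModelID
    }
}

// Hypothetical helper: looks the model up again in a main actor context.
@MainActor
func exerciseName(for id: PersistentIdentifier, in context: ModelContext) -> String? {
    (context.model(for: id) as? Exercise)?.name
}
```

The identifier is safe to send between actors, and each side works with its own instance of the model that is tied to its own context.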

Not every app will need this or encounter this, especially when you’re running code on the main actor by default. If you do encounter this problem for SwiftData models, you should probably isolate the problematic area to the main actor unless you specifically created a model actor in the background.

Let’s take a look at a second error that, as far as I’ve seen, is pretty common right now in the Xcode 26 beta: using Codable objects with default actor isolation.

Fixing Conformance of protocol 'Encodable' crosses into main actor-isolated code and can cause data races

This error is quite interesting and I wonder whether it’s something Apple can and should fix during the beta cycle. That said, as of Beta 2 you might run into this error for models that conform to Codable. Let’s look at a simple model:

```swift
struct Sample: Codable {
    var name: String
}
```

This model has two compiler errors:

- Circular reference

- Conformance of 'Sample' to protocol 'Encodable' crosses into main actor-isolated code and can cause data races

I’m not exactly sure why we’re seeing the first error. I think this is a bug because it makes no sense to me at the moment.

The second error says that our Encodable conformance “crosses into main actor-isolated code”. If you dig a bit deeper, you’ll see the following error as an explanation for this: “Main actor-isolated instance method 'encode(to:)' cannot satisfy nonisolated requirement”.

In other words, our protocol conformance adds a main actor-isolated implementation of encode(to:) while the protocol requires this method to be nonisolated.

The reason we’re seeing this error is not entirely clear to me but there seems to be a mismatch between our protocol conformance’s isolation and our Sample type.

We can do one of two things here; we can either make our model nonisolated or constrain our Codable conformance to the main actor.

```swift
nonisolated struct Sample: Codable {
    var name: String
}

// or

struct Sample: @MainActor Codable {
    var name: String
}
```

The former will make it so that everything on our Sample is nonisolated and can be used from any isolation context. The second option makes it so that our Sample conforms to Codable but only on the main actor:

```swift
func createSampleOnMain() {
    // this is fine
    let sample = Sample(name: "Sample Instance")
    let data = try? JSONEncoder().encode(sample)
    let decoded = try? JSONDecoder().decode(Sample.self, from: data ?? Data())
    print(decoded)
}

nonisolated func createSampleFromNonIsolated() {
    // this is not fine
    let sample = Sample(name: "Sample Instance")

    // Main actor-isolated conformance of 'Sample' to 'Encodable' cannot be used in nonisolated context
    let data = try? JSONEncoder().encode(sample)

    // Main actor-isolated conformance of 'Sample' to 'Decodable' cannot be used in nonisolated context
    let decoded = try? JSONDecoder().decode(Sample.self, from: data ?? Data())
    print(decoded)
}
```

So generally speaking, you don’t want your protocol conformance to be isolated to the main actor for your Codable models if you’re decoding them on a background thread. If your models are relatively small, it’s likely perfectly acceptable for you to be decoding and encoding on the main actor. These operations should be fast enough in most cases, and sticking with main actor code makes your program easier to reason about.

The best solution will depend on your app, your constraints, and your requirements. Always measure your assumptions when possible and stick with solutions that work for you; don’t introduce concurrency “just to be sure”. If you find that your app benefits from decoding data on a background thread, the solution for you is to mark your type as nonisolated; if you find no direct benefits from background decoding and encoding in your app you should constrain your conformance to @MainActor.
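If you do go the nonisolated route, background decoding could look something like the sketch below. It assumes Swift 6.2 for the @concurrent attribute; decodeSample(from:) and loadSample() are hypothetical helpers I wrote for this example, and the JSON is made up.

```swift
import Foundation

// nonisolated opts the whole type, including its synthesized Codable
// conformance, out of main actor isolation.
nonisolated struct Sample: Codable {
    var name: String
}

// Hypothetical helper: @concurrent makes this async function run off the
// caller's actor, so the decoding work doesn't happen on the main actor.
@concurrent
func decodeSample(from data: Data) async throws -> Sample {
    try JSONDecoder().decode(Sample.self, from: data)
}

@MainActor
func loadSample() async throws -> String {
    let json = Data(#"{"name": "Sample Instance"}"#.utf8)
    // We suspend here while decoding happens elsewhere, then resume on main.
    let sample = try await decodeSample(from: json)
    return sample.name
}
```

Because Sample only holds a String it can cross back to the main actor without any extra work. With the @MainActor Codable variant, the decode call inside decodeSample(from:) would not compile.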

If you’ve implemented a custom encoding or decoding strategy, you might be running into a different error…

Conformance of 'CodingKeys' to protocol 'CodingKey' crosses into main actor-isolated code and can cause data races

Now, this one is a little trickier. When we have a custom encoder or decoder, we might also want to provide a CodingKeys enum:

```swift
struct Sample: @MainActor Decodable {
    var name: String

    // Conformance of 'Sample.CodingKeys' to protocol 'CodingKey' crosses into main actor-isolated code and can cause data races
    enum CodingKeys: CodingKey {
        case name
    }

    init(from decoder: any Decoder) throws {
        let container = try decoder.container(keyedBy: CodingKeys.self)
        self.name = try container.decode(String.self, forKey: .name)
    }
}
```

Unfortunately, this code produces an error. Our conformance to CodingKey crosses into main actor-isolated code and that might cause data races. Usually this would mean that we can constrain our conformance to the main actor and this would solve our issue:

```swift
// Main actor-isolated conformance of 'Sample.CodingKeys' to 'CustomDebugStringConvertible' cannot satisfy conformance requirement for a 'Sendable' type parameter 'Self'
enum CodingKeys: @MainActor CodingKey {
    case name
}
```

This unfortunately doesn’t work because CodingKey requires us to be CustomDebugStringConvertible, which requires a Sendable Self.

Isolating our conformance to the main actor should mean that both CodingKeys and CodingKey are Sendable, but because CustomDebugStringConvertible comes in through CodingKey, I think our @MainActor isolation doesn’t carry over.

This might also be a rough edge or bug in the beta; I’m not sure.

That said, we can fix this error by making our CodingKeys nonisolated:

```swift
struct Sample: @MainActor Decodable {
    var name: String

    nonisolated enum CodingKeys: CodingKey {
        case name
    }

    init(from decoder: any Decoder) throws {
        let container = try decoder.container(keyedBy: CodingKeys.self)
        self.name = try container.decode(String.self, forKey: .name)
    }
}
```

This code works perfectly fine both when Sample is nonisolated and when Decodable is isolated to the main actor.

Both this issue and the previous one feel like compiler bugs, so if these get resolved during Xcode 26’s beta cycle I will make sure to come back and update this article.

If you’ve encountered errors related to actor-isolated protocol conformance yourself, I’d love to hear about them. It’s an interesting feature and I’m trying to figure out how exactly it fits into the way I write code.